MemMachine

MemMachine is the open-source memory layer that makes AI agents learn and personalize over time.

About MemMachine

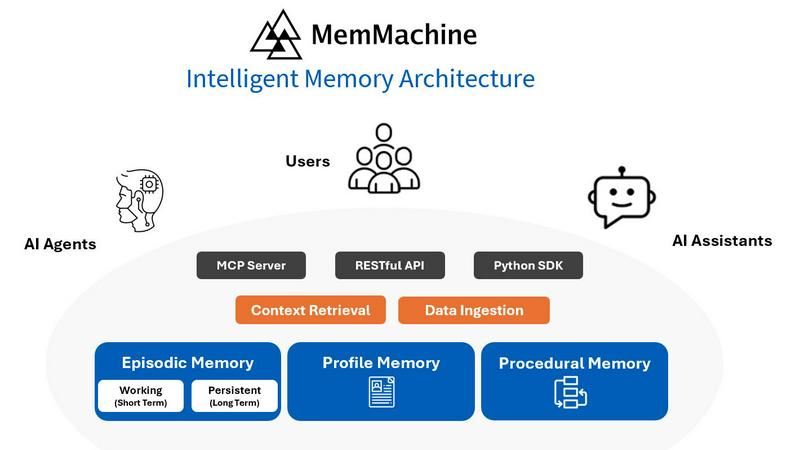

MemMachine is an open-source memory layer for AI agents and applications. Most AI interactions start from zero; MemMachine persists context, preferences, and data across sessions, so agents can remember past conversations, user details, and interaction patterns and build a user profile that evolves and enriches every future interaction.

It is designed for engineering teams and developers, from startups to enterprises, who are building AI-powered applications and need their agents to deliver depth, personalization, and contextual awareness.

The core value proposition is persistent intelligence. By decoupling memory from the application logic and the LLM itself, MemMachine lets you build assistants that don't just answer questions but also understand relationships and recall history, while you keep full data control and integrate with your existing stack.

Features of MemMachine

Persistent & Evolving Memory

MemMachine's core engine maintains a continuous memory layer that persists across user sessions, different AI agents, and even various underlying large language models (LLMs). It doesn't just store chat history; it builds a dynamic, sophisticated user profile that learns and adapts. This means your application can recall a user's stated preferences, past challenges, interaction patterns, and important contextual details, transforming a generic chatbot into a truly personalized assistant that grows smarter with every interaction.
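To make the idea of memory that outlives a session concrete, here is a minimal sketch using only Python's standard-library `sqlite3` module. It is not MemMachine's API: the `ProfileStore` class, its methods, and the table layout are hypothetical names chosen for illustration. It only shows the general pattern of writing user facts to durable storage in one session and reading them back in another.

```python
import os
import sqlite3
import tempfile


class ProfileStore:
    """Hypothetical per-user fact store (illustration only, not MemMachine's API)."""

    def __init__(self, path):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS facts ("
            "user_id TEXT, key TEXT, value TEXT, "
            "PRIMARY KEY (user_id, key))"
        )

    def remember(self, user_id, key, value):
        # INSERT OR REPLACE lets a newer statement overwrite an older one.
        self.conn.execute(
            "INSERT OR REPLACE INTO facts (user_id, key, value) VALUES (?, ?, ?)",
            (user_id, key, value),
        )
        self.conn.commit()

    def profile(self, user_id):
        # Return everything known about one user as a plain dict.
        rows = self.conn.execute(
            "SELECT key, value FROM facts WHERE user_id = ? ORDER BY key",
            (user_id,),
        )
        return dict(rows.fetchall())

    def close(self):
        self.conn.close()


def demo():
    path = os.path.join(tempfile.mkdtemp(), "memory.db")

    # "Session 1": the user states a preference, then the process ends.
    first = ProfileStore(path)
    first.remember("user-1", "appointment_time", "afternoon")
    first.close()

    # "Session 2": a fresh connection still sees the stored fact.
    second = ProfileStore(path)
    result = second.profile("user-1")
    second.close()
    return result
```

Calling `demo()` opens the database twice to imitate two separate sessions; the second connection reads back what the first one stored.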

Multi-Platform LLM Integration

MemMachine integrates with a range of AI providers and models. It works natively with OpenAI, AWS Bedrock, and local models via Ollama. It can also run as a Model Context Protocol (MCP) server, so MCP-compatible clients and platforms can connect to it as they become available, and your memory layer is not locked into a single vendor.
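One way to picture vendor independence is to keep the memory-to-prompt step separate from the model call. The sketch below is an assumption-laden illustration, not MemMachine code: `Model`, `build_prompt`, `ask`, and the two stub models are hypothetical, and the stubs stand in for real provider calls (OpenAI, AWS Bedrock, Ollama), which are deliberately not shown.

```python
from typing import Callable, List

# Assumption: a "model" is any callable that maps a prompt string to a reply.
Model = Callable[[str], str]


def build_prompt(memories: List[str], question: str) -> str:
    # The memory layer produces plain text, so it does not depend on the provider.
    context = "\n".join(f"- {m}" for m in memories)
    return f"Known about the user:\n{context}\n\nQuestion: {question}"


def ask(model: Model, memories: List[str], question: str) -> str:
    # Swapping providers means passing a different callable; nothing else changes.
    return model(build_prompt(memories, question))


def shouting_model(prompt: str) -> str:
    """Stub standing in for one provider."""
    return prompt.upper()


def counting_model(prompt: str) -> str:
    """Stub standing in for a different provider."""
    return f"{len(prompt)} characters received"
```

Because both stubs satisfy the same `Model` signature, `ask(shouting_model, ...)` and `ask(counting_model, ...)` receive an identical prompt built from the same memories.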

Flexible Deployment & Data Control

You retain complete sovereignty over your data and infrastructure. MemMachine can be deployed locally for maximum privacy and low latency, in your own cloud environment for scalable performance, or installed via pip for rapid development. This flexibility lets teams meet strict compliance requirements, optimize for cost and performance, and integrate memory into their architecture exactly where it's needed without being forced into a proprietary SaaS box.

Open-Source with Community Support

As a fully open-source project, MemMachine offers transparency and the freedom to audit, customize, and extend its capabilities to fit unique use cases. It is backed by comprehensive documentation, a community on Discord for real-time collaboration, and an active development cycle, so you are building on a foundation that is reviewed and improved by its users.

Use Cases of MemMachine

Personalized Healthcare Assistants

Transform patient engagement by building AI health companions that remember medical history, appointment preferences, medication schedules, and past symptoms. This enables compassionate, context-aware interactions where the assistant can proactively suggest afternoon appointments for a patient who dislikes mornings or recall specific test preparation instructions, dramatically improving care quality and patient satisfaction.

Intelligent Customer Support Agents

Move beyond scripted responses to create support agents that remember a customer's entire journey. The agent can recall past tickets, product preferences, solved issues, and even the customer's sentiment, allowing for faster resolution, personalized recommendations, and a support experience that feels attentive, which can reduce frustration and churn.

Context-Aware Creative & Research Co-pilots

Empower writers, researchers, and analysts with AI partners that remember their workflow. The assistant can recall key articles, stored notes, preferred writing styles, and ongoing project themes. This allows for deep, continuous collaboration where the AI provides relevant suggestions, critiques based on past feedback, and helps connect ideas across a long-term body of work.

Adaptive Learning & Coaching Platforms

Build educational tools and professional coaching agents that develop a nuanced understanding of a user's learning pace, knowledge gaps, strengths, and goals over time. The platform can adapt lesson difficulty, recommend specific resources based on past struggles, and provide encouragement tailored to the individual's journey, creating a truly personalized path to mastery.

Frequently Asked Questions

How does MemMachine differ from just using a vector database?

While MemMachine can utilize vector databases for semantic search, it is a complete memory layer with higher-level abstractions. It manages not just embeddings, but also structured entity relationships, temporal context, and user profiles. It handles the logic of what to store, when to recall it, and how to format it for the LLM, going far beyond simple vector storage to provide turnkey, intelligent memory for your application.
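The three responsibilities named above (what to store, when to recall it, how to format it for the LLM) can be sketched in a few lines. This is a toy illustration, not MemMachine's implementation: `TinyMemory` and its methods are hypothetical, the storage rule is a crude first-person heuristic, and recall uses plain keyword overlap plus recency in place of embeddings or entity relationships.

```python
import re
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    turn: int  # conversation turn when stored; larger means more recent


def tokens(s):
    # Lowercased word set used for the keyword-overlap score.
    return set(re.findall(r"[a-z0-9]+", s.lower()))


class TinyMemory:
    """Hypothetical toy memory layer (illustration only)."""

    def __init__(self):
        self.items = []
        self.turn = 0

    def maybe_store(self, utterance):
        # What to store: keep only first-person statements (toy heuristic).
        self.turn += 1
        if re.search(r"\b(i|my)\b", utterance.lower()):
            self.items.append(Memory(utterance, self.turn))
            return True
        return False

    def recall(self, query, k=2):
        # When to recall: only memories sharing a word with the query,
        # ranked by overlap first and recency second.
        q = tokens(query)
        scored = [(len(q & tokens(m.text)), m.turn, m) for m in self.items]
        hits = [s for s in scored if s[0] > 0]
        hits.sort(key=lambda s: (s[0], s[1]), reverse=True)
        return [m for _, _, m in hits[:k]]

    def format_for_prompt(self, query):
        # How to format: a short bulleted block the LLM prompt can include.
        lines = [f"- {m.text}" for m in self.recall(query)]
        if not lines:
            return ""
        return "Relevant memories:\n" + "\n".join(lines)
```

For example, after storing "I dislike morning appointments" and "My doctor is Dr. Lee", the query "Which appointments suit me?" recalls only the first statement, because it is the only one sharing a word with the query.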

Is my data secure with MemMachine?

Data security and privacy are foundational. Because MemMachine is open source, you can audit the code yourself. Crucially, you maintain full data control because you deploy and host MemMachine within your own infrastructure (locally or in your private cloud). Your user data never needs to be sent to a third-party memory service; it remains within your environment.

Can I use MemMachine with any LLM or AI model?

Yes, MemMachine is designed to be LLM-agnostic. It provides native integrations for popular platforms like OpenAI and AWS Bedrock and supports any local model running via Ollama. Its MCP (Model Context Protocol) server capability extends compatibility to other current and future MCP-compatible platforms, so your memory layer is not tied to a single model.

What is required to start implementing MemMachine?

Getting started is straightforward for developers. You can install MemMachine via pip (pip install memmachine). The project includes comprehensive documentation and examples to guide you through the initial setup, which typically involves connecting your memory store (like a database) and integrating the MemMachine client into your existing agent or application logic. The active Discord community is also a great resource for support.
